I'm following the steps of SGD, but unsure if I am interpreting the steps correctly.

Let's say there are two w terms:

where x is tensors and w are two logistic function params.

and the goal is to find argmin(lambda)

And SGD formula is given as:

This is the code I have so far, with library constraints:

import numpy as np

import torch

some_data = torch.rand(1000, 2)

x = some_data[:,0]

y = (x + 0.4 * some_data[:,1] > 0.5).to(torch.int) # doesn't work with bool?

x = torch.split(x, 3) # batch

y = torch.split(y, 3)

# optim problem

w1 = torch.autograd.Variable(torch.tensor([0.1]), requires_grad=True)

w2 = torch.autograd.Variable(torch.tensor([0.1]), requires_grad=True)

lambda_min = torch.zeros(1)

for epoch in range(10):

#print(f'epoch: ', epoch)

for xx, yy in zip(x, y):

p_x = 1 / (1 + torch.exp(-w1 - (torch.mul(w2, x))))

# NLL

lambda = torch.sum(yy * torch.log(p_x) + (1 + yy) * torch.log(1 - p_x))

# Gradient???

if lambda < lambda_min :

lambda_min = lambda # Q: loss value, right?

# Q: update params...?

w2 = w1 - torch.mul(0.01, lambda_min)

print(f'w2 = ', w2)

else:

pass

I would like to confirm the parts with "Q:"

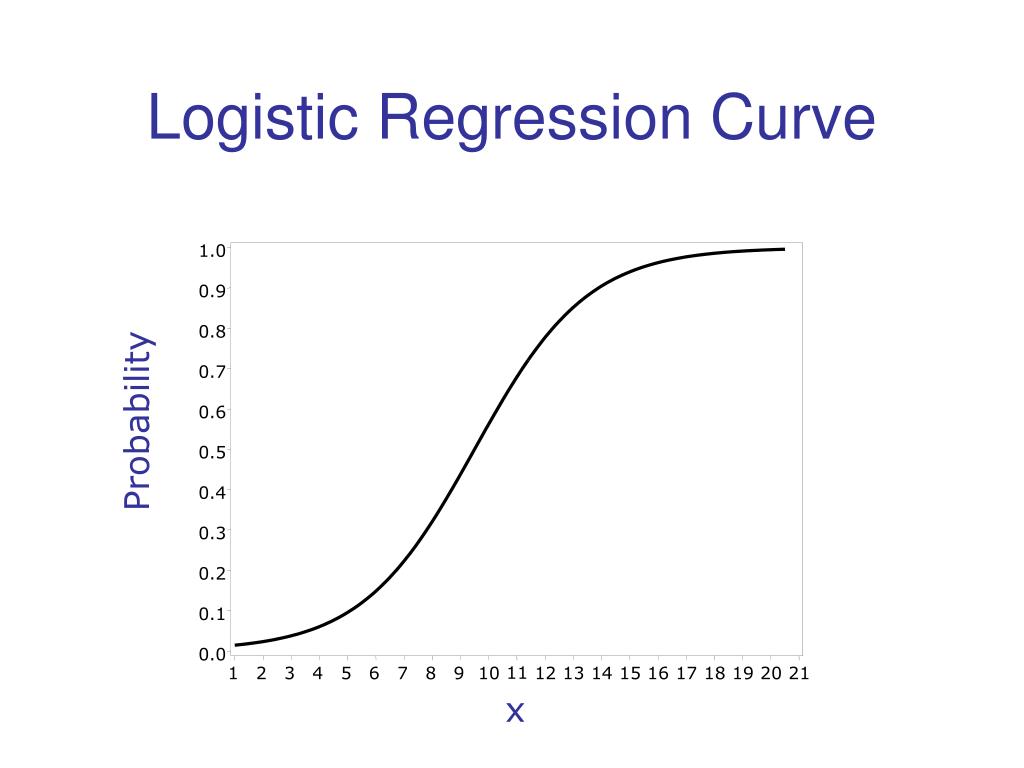

Then how would I plot this with y and x to look like a regular logistic function with tensors, similar to below, but with all the 1s and 0s in the graph?

There are multiple issues with this code.

lambda < lambda_min. BTW,lambdais a keyword and can't be used as a variable name.w2 = w1 - torch.mul(0.01, lambda_min)is not a gradient descent step:w2equal to oldw2plus some adjustment. You code sets it equal tow1(!) plus some adjustment.(1 + yy) * torch.log(1 - p_x)should be(1 - yy) * torch.log(1 - p_x)(note the "1minusyy" part).